Docker: An open-source containerization platform

On this page

- Overview

- How does Docker work?

- Docker architecture

- Docker Compose

- Generate a Dockerfile with jk

- Tools

- References

- Examples

- .dockerignore

Overview

Docker is an open platform for developing, shipping, and running applications. Docker enables you to separate your applications from your infrastructure so you can deliver software quickly. With Docker, you can manage your infrastructure in the same ways you manage your applications. By taking advantage of Docker’s methodologies for shipping, testing, and deploying code quickly, you can significantly reduce the delay between writing code and running it in production.

Docker provides the ability to package and run an application in a loosely isolated environment called a container. The isolation and security allow you to run many containers simultaneously on a given host.

How does Docker work?

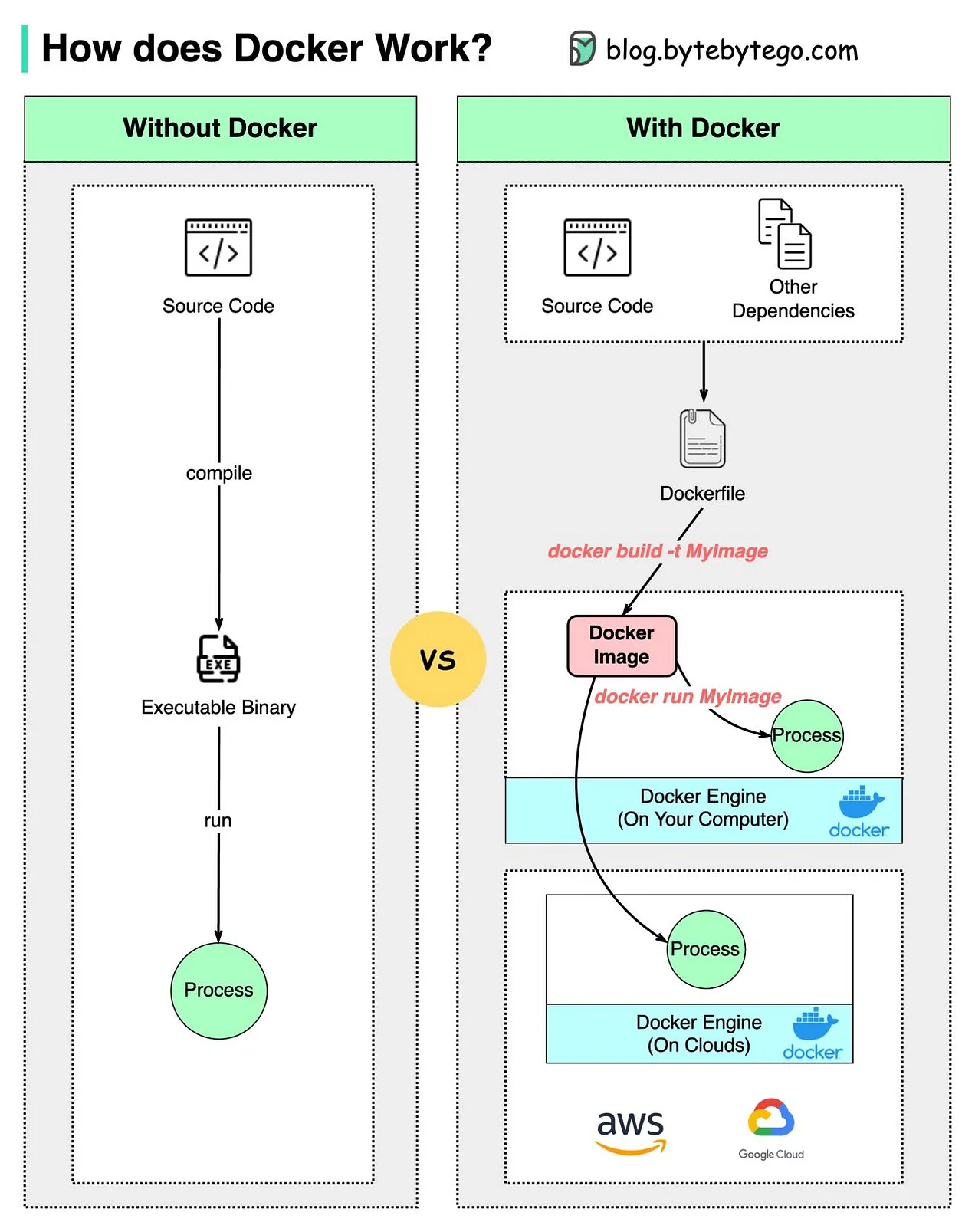

A comparison of Docker-based and non-Docker-based development is shown above. With Docker, we can develop, package, and run applications quickly.

- Developers write code locally, build a Docker image, and push it to a dev environment.

- This cycle repeats incrementally whenever bugs are found or improvements are needed.

- When dev testing is complete, the Docker image is pushed to the production environment (often in the cloud).
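The build-and-push cycle described above can be scripted. The following is a minimal sketch that assembles the `docker build` and `docker push` command lines and, by default, only prints them. The registry host `registry.example.com`, the image name `myapp`, the tag `dev`, and the helper names are hypothetical and not part of the original page.

```python
import shlex
import subprocess


def release_commands(registry, image, tag, context="."):
    """Return the docker CLI invocations for one build-and-push cycle."""
    ref = f"{registry}/{image}:{tag}"
    return [
        ["docker", "build", "-t", ref, context],  # build from the Dockerfile in `context`
        ["docker", "push", ref],                  # upload the image to the registry
    ]


def release(registry, image, tag, dry_run=True):
    """Print the commands (dry run) or execute them, stopping at the first failure."""
    for command in release_commands(registry, image, tag):
        if dry_run:
            print(shlex.join(command))
        else:
            subprocess.run(command, check=True)


if __name__ == "__main__":
    # Hypothetical registry and image name; replace with your own.
    release("registry.example.com", "myapp", "dev")
```

Calling `release(..., dry_run=False)` would run the same commands for real, which requires a running Docker daemon and push access to the registry.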

Compared with traditional development without Docker, this workflow is lightweight and fast: Docker caches each image layer, so when something changes, only the layer for the changed Dockerfile instruction and the layers after it are rebuilt.
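To make the rebuild behavior concrete, here is a simplified model of layer caching, not Docker's actual implementation. It assumes each step's cache key depends on the previous step's key, the instruction text, and (for `COPY`) the contents of the copied files. The function names and the sample Dockerfile steps are illustrative assumptions.

```python
import hashlib


def layer_keys(instructions, file_contents):
    """Compute one cache key per instruction in a simplified model.

    Each key chains the parent key with the instruction text; COPY steps
    also mix in the contents of their source files.
    """
    keys, parent = [], ""
    for instruction in instructions:
        digest = hashlib.sha256((parent + "\n" + instruction).encode())
        if instruction.startswith("COPY "):
            # Everything between "COPY" and the destination is a source.
            for source in instruction.split()[1:-1]:
                digest.update(file_contents.get(source, "").encode())
        parent = digest.hexdigest()
        keys.append(parent)
    return keys


def first_changed_step(old_keys, new_keys):
    """Return the index of the first step whose key differs (len(new_keys) if none)."""
    for index, (old, new) in enumerate(zip(old_keys, new_keys)):
        if old != new:
            return index
    return len(new_keys)


dockerfile = [
    "FROM node:16-alpine",
    "COPY package.json /app/",
    "RUN npm install",
    "COPY src /app/src",
]
before = layer_keys(dockerfile, {"package.json": "deps v1", "src": "console.log(1)"})
after = layer_keys(dockerfile, {"package.json": "deps v1", "src": "console.log(2)"})
print(first_changed_step(before, after))  # 3: only the last step is rebuilt
```

In this model, editing only the source code leaves the `npm install` step cached, which is why dependency manifests are usually copied before the rest of the source.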

Docker architecture

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing your Docker containers. The Docker client and daemon can run on the same system, or you can connect a Docker client to a remote Docker daemon. The Docker client and daemon communicate using a REST API, over UNIX sockets or a network interface.
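The client-daemon conversation can be observed without the Docker CLI. The sketch below sends a `GET /version` request to the daemon's REST API over a UNIX socket using only the Python standard library. It assumes the daemon listens on `/var/run/docker.sock` (the usual default on Linux); the class and function names are hypothetical.

```python
import http.client
import json
import socket
from typing import Optional


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # "localhost" is only used for the Host header, not for routing.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def daemon_version(socket_path: str = "/var/run/docker.sock") -> Optional[dict]:
    """Return the daemon's /version response as a dict, or None if unreachable."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/version")
        response = conn.getresponse()
        if response.status != 200:
            return None
        return json.loads(response.read())
    except (OSError, http.client.HTTPException):
        # Socket missing, permission denied, or daemon not answering.
        return None
    finally:
        conn.close()


if __name__ == "__main__":
    info = daemon_version()
    print(info["Version"] if info else "Docker daemon not reachable")
```

The same request could be sent to a remote daemon over a network interface; only the transport changes, not the API.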

Docker Compose

Compose is a tool for defining and running multi-container Docker applications on a single host. With Compose, you use a YAML file to configure your application’s services. Then, with a single command, you create and start all the services from your configuration. To learn more about all the features of Compose, see the list of features.

Compose works in all environments: production, staging, development, testing, as well as CI workflows. You can learn more about each case in Common Use Cases.

Using Compose is basically a three-step process:

1. Define your app’s environment with a Dockerfile so it can be reproduced anywhere.

2. Define the services that make up your app in docker-compose.yml so they can be run together in an isolated environment.

3. Run docker-compose up and Compose starts and runs your entire app.

A docker-compose.yml looks like this:

    version: "3"
    services:
      app:
        build: .
        # depends_on must name a service defined in this file (here: postgres)
        depends_on:
          - postgres
        environment:
          DATABASE_URL: postgres://user:pass@postgres:5432/db
          NODE_ENV: development
          PORT: 3000
        ports:
          - "3000:3000"
        command: npm run dev
        volumes:
          - .:/app/
          - /app/node_modules
      postgres:
        image: postgis/postgis:11-2.5-alpine
        environment:
          - POSTGRES_DB=sensorthings
          - POSTGRES_USER=sensorthings
          - POSTGRES_PASSWORD=ChangeMe
        volumes:
          - postgis_volume:/var/lib/postgresql/data
    volumes:
      postgis_volume:
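The `depends_on` key influences the order in which Compose starts services: dependencies are started first. The sketch below illustrates that ordering with a topological sort. It is not Compose's implementation, and it assumes the app service depends on the postgres database service; the function name is hypothetical.

```python
from graphlib import TopologicalSorter


def start_order(depends_on):
    """Return service names ordered so that every dependency comes first."""
    return list(TopologicalSorter(depends_on).static_order())


services = {
    "app": ["postgres"],  # app declares depends_on: postgres
    "postgres": [],       # the database has no dependencies
}
print(start_order(services))  # ['postgres', 'app']
```

Start order is not the same as readiness: a database container can be started and still not accept connections yet, so the application should retry its connection or use a health check.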

More information about the compose file.

Generate a Dockerfile with jk

    import * as param from "@jkcfg/std/param";

    // input is the 'service' input parameter.
    const input = param.Object("service");

    // Our docker images are based on alpine
    const baseImage = "alpine:3.8";

    // Dockerfile is a function generating a Dockerfile from a service object.
    const Dockerfile = (service) => `FROM ${baseImage}
    EXPOSE ${service.port}
    COPY ${service.name} /
    ENTRYPOINT /${service.name}`;

    // Instruct generate to produce a Dockerfile with the value returned by the
    // Dockerfile function.
    export default [{ path: "Dockerfile", value: Dockerfile(input) }];

Tools

dive: A tool for exploring a Docker image and its layer contents, and for discovering ways to shrink the size of your Docker/OCI image.

docker-slim optimizes and secures your containers by analyzing your application to understand what it needs. It throws away what you don't need, reducing the attack surface of your container. If you need some of those extra things to debug your container, you can use dedicated debugging sidecar containers.

References

- Docker for beginners: Learn to build and deploy your distributed applications easily to the cloud with Docker

- Getting Started with Docker: DockerLabs provides over 500 interactive tutorials and guides for developers of all levels, covering topics such as Docker security, networking, and AI/ML.

- How to clean your Docker data: Docker makes no configuration changes to your system … but it can use a significant volume of disk space. Fortunately, Docker allows you to reclaim disk space from unused images, containers, and volumes.

- Dock Life: Using Docker for All The Things!: Embracing Docker for All The Things gives you a more flexible, robust, and transportable way to use tools on your computer without messy setup

      # Docker aliases
      alias composer='docker run --rm -it -v "$PWD":/app -v ${COMPOSER_HOME:-$HOME/.composer}:/tmp composer '
      alias composer1='docker run --rm -it -v "$PWD":/app -v ${COMPOSER_HOME:-$HOME/.composer}:/tmp composer:1 '
      alias node='docker run --rm -it -v "$PWD":/app -w /app node:16-alpine '
      alias node14='docker run --rm -it -v "$PWD":/app -w /app node:14-alpine '
      alias node12='docker run --rm -it -v "$PWD":/app -w /app node:12-alpine '
      alias node10='docker run --rm -it -v "$PWD":/app -w /app node:10-alpine '
      alias npm='docker run --rm -it -v "$PWD":/app -w /app node:16-alpine npm '
      alias deno='docker run --rm -it -v "$PWD":/app -w /app denoland/deno '
      alias aws='docker run --rm -it -v ~/.aws:/root/.aws amazon/aws-cli '
      alias ffmpeg='docker run --rm -it -v "$PWD":/app -w /app jrottenberg/ffmpeg '
      alias yo='docker run --rm -it -v "$PWD":/app nystudio107/node-yeoman:16-alpine '
      alias tree='f(){ docker run --rm -it -v "$PWD":/app johnfmorton/tree-cli tree "$@"; unset -f f; }; f'

- This blog series covers five areas of Dockerfile best practices to help you write better Dockerfiles: incremental build time, image size, maintainability, security, and repeatability.

- This article provides production-grade guidelines for building optimized and secure Node.js Docker images.

- This full-length Docker book is rich with code examples. It teaches containerization, custom Docker images and online registries, and how to work with multiple containers using Docker Compose.

- This guide explains the most important Docker concepts you need to work effectively with Docker for application development.

- A step-by-step introduction to how the official Python Docker image is made, with a detailed walkthrough of its Dockerfile.

- Container security is a broad problem space, and there is plenty of low-hanging fruit to pick to mitigate risks. A good starting point is to follow some rules when writing Dockerfiles.

Examples

    # Start from golang base image
    FROM golang:1.13-alpine as builder

    # Set the current working directory inside the container
    WORKDIR /build

    # Copy go.mod, go.sum files and download deps
    COPY go.mod go.sum ./
    RUN go mod download

    # Copy sources to the working directory
    COPY . .

    # Build the Go app
    ARG project
    RUN GOOS=linux CGO_ENABLED=0 GOARCH=amd64 go build -a -v -o server $project

    # Start a new stage from busybox
    FROM busybox:latest
    WORKDIR /dist

    # Copy the build artifacts from the previous stage
    COPY --from=builder /build/server .

    # Run the executable
    CMD ["./server"]

.dockerignore

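Use a .dockerignore file in every project where you build Docker images. It keeps unneeded and sensitive files (such as .git, node_modules, and .env files) out of the build context, which makes builds faster, images smaller, and accidental leaks of secrets less likely. It also helps the Docker build cache during local development, because changes to ignored files no longer invalidate cached layers.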

    # Items that don't need to be in a Docker image.
    # Anything not used by the build system should go here.

    # overhead
    Dockerfile
    docker-compose.yml
    .dockerignore
    docs
    .vscode
    .gitignore
    .git
    .github
    .cache
    LICENSE
    *.md
    .storybook
    .codeship*
    codeship*.yml
    codeship.aes
    .env.development
    .env.local

    # artifacts
    build
    dist
    node_modules
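To see how such patterns decide what stays out of the build context, here is a deliberately simplified matcher. It compares patterns against paths component by component, relative to the context root, and treats a matched directory as excluding everything inside it. It does not handle `**` wildcards or `!` exceptions, which the real .dockerignore format supports; the function name is hypothetical.

```python
from fnmatch import fnmatchcase


def is_ignored(path, patterns):
    """Return True if `path` (relative to the build context) matches any pattern.

    Simplified model: no `**` wildcards and no `!` exception rules.
    """
    parts = path.split("/")
    for pattern in patterns:
        pattern_parts = pattern.strip("/").split("/")
        if len(pattern_parts) > len(parts):
            continue
        # Matching a leading directory also excludes everything below it.
        if all(fnmatchcase(part, pat) for part, pat in zip(parts, pattern_parts)):
            return True
    return False


patterns = [".git", "*.md", ".env.local", "node_modules"]
for candidate in ["README.md", "node_modules/express/index.js", "src/index.js", "docs/guide.md"]:
    print(candidate, is_ignored(candidate, patterns))
```

In this model `*.md` only matches Markdown files at the context root, so `docs/guide.md` is not excluded by it; the example file above excludes the `docs` directory with its own entry.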